Seminar: Digital Ethics and Politics

How should people in universities learn and produce knowledge in the age of generative AI? Critical thinking about technology and knowledge in society offers the first elements of an answer.

Knowing AI: Generative AI in university research and education

When students returned to classrooms at the beginning of the Spring 2023 semester, discussions were already underway about the profound effects that a newly available public technology, generative text chatbots and in particular OpenAI’s ChatGPT, could have on learning and research.

At my university, ETH Zurich, faculty, administrators and students began to ask important questions: What kind of tool is this? How can and should it be accommodated in our workspaces, our ways of caring for one another, and our forms of knowledge-making? How do we, as an academic community, preserve what we consider important, such as scientific integrity or democratic oversight of technology? What skills are needed to work with generative AI well and responsibly? What do we need to know? Who needs to know it? Who needs to adapt, and how?

Subsumed under pedagogical projects such as digital literacy or critical thinking, these diverse questions converge on an issue that is well known to the interpretive disciplines that study the history, philosophy, and politics of knowledge. The central question that AI poses, and that we pose about AI in the university, is: What does it mean to know well with AI? Here “with” refers both to AI as an instrument of knowledge-production and to AI as a defining element of the broader conditions of knowledge in society.

WHAT DOES IT MEAN TO KNOW WELL WITH AI?

To respond to this question, a community of students and teachers in the new ETH Zurich course Digital Ethics and Politics undertook a study of the role of generative AI in research and learning. Over the semester, we worked to articulate and develop our perspectives. Each perspective identified an issue of ethical significance for the student’s learning trajectory, their discipline, and their university in a world with generative AI. Students drew upon frameworks and methods from the field of Science, Technology and Society (STS) and from constructive historical thinking to analyze the issue. We then gathered the individual perspectives into a report that we presented to the ETH Rektor in the summer of 2023. Importantly, the study brought together the perspectives of students from diverse backgrounds and disciplines, including computer science, data science, mathematics, history and philosophy of knowledge, materials science, and technology and public policy. The essays in this publication offer a vibrant selection of this wider work, presented by students who wished to take their perspectives, and the collective project, to a wider audience.

Our study highlights three essential components of what it means to know well with AI.

TAKE TOOLS SERIOUSLY

First, we argue, it is necessary to take seriously the idea that text-generating AI technologies are tools. What kinds of tools are these? How are they similar to or different from the tools people use, or have used in the past, for learning, thinking, and creating? Our central point is that the tools people make are never neutral but emerge from pre-existing social structures, interests, and relations. Symmetrically, tools actively shape the ways of thinking and knowing of the individuals and communities that use them.

Fanny Tockner observes how the tool metaphor features in the vocabulary of ETH’s response to ChatGPT and argues that this metaphor reinforces an idea of technology as neutral and value-free. This becomes problematic when a technology such as ChatGPT, through its design and its biases, comes with its own politics. Tockner therefore favors thinking about ChatGPT through a more interactive concept of tools, one in which tools inform and extend the user’s perception of reality as they are used.

THE POWER OF INSTITUTIONAL VALUES

Taking AI seriously as a tool quickly brings the role of the tool’s designers to the fore. The second insight of the study is that the institutional contexts that shaped generative AI matter for the kind of knowledge it can support, how that knowledge is produced, by whom, and for whom. Properly integrating generative AI into the specific culture and mission of ETH Zurich requires a symmetrical analysis of the institutional contexts of both the producers and the recipients, or adopters, of the technology. Such an analysis makes it possible to consider the relationship between the environment in which the tool was developed and the one into which it is being integrated. Our study identifies a similarity between the missions of OpenAI and ETH: both aim to advance the “common good” through the development of new knowledge and technologies. Yet it also points to salient differences in how the two institutions live out these values and to whom they are beholden. ETH’s responsibility to the wellbeing of Swiss society and of its students requires it to consider, from the start, the long-term and broader societal consequences of the technological innovations it contributes to and of the professionals it trains, beginning with what and how students learn in the classroom.

In her essay, Sara Kinell asks about the ethical implications of how ChatGPT relates to these purposes and values of higher education. She observes that ChatGPT’s power to assemble a plurality of perspectives into singular outputs, outputs that also risk entrenching historical biases, poses a challenge to ETH’s values of openness and diversity. To counter this tendency of AI chatbots to foreclose diverse voices, Kinell calls on ETH to actively support its community in developing new reservoirs of critical and constructive thinking.

We argue that it is important to ensure that people with a diverse range of perspectives can both contribute to the development of AI technologies and help shape their integration into educational communities. This point is of immediate importance for how ETH supports the introduction of this new tool, and it is significant for longer-term educational reform. In response to the advent of generative AI, universities should focus on the educational competencies, in computing as well as in the human contexts and ethics of technology, that students need in order to contribute to the development of future AI technologies in a plurality of roles: as designers, users, and citizens.

DIVERSE EPISTEMIC CULTURES

The third insight of the study is that the situated contexts of specific disciplines matter for what it means to know with AI. Generative AI enters diverse intellectual communities, each with its own established epistemic culture. Epistemic culture includes ways of asking questions, norms of collaboration and authorship, theoretical commitments, standards of evidence, and disciplined research practices. Beginning with these situated points of view, the authors explore adaptations that students, researchers, and ETH as a whole can make to their research and learning practices in order to integrate chatbots effectively and responsibly, with awareness of how these tools interact with human users in each field.

In her essay, Lisa Thomann compares the language generated by AI with the characteristics associated with academic language. She argues that while ChatGPT is able to imitate grammar or style, it is unable to recognize biases and ensure transparency in the way that academic language is expected to. The use of AI in academic text production, she concludes, will depend on how scientists work with and compensate for these limitations within the writing repertoires and norms in their own field.

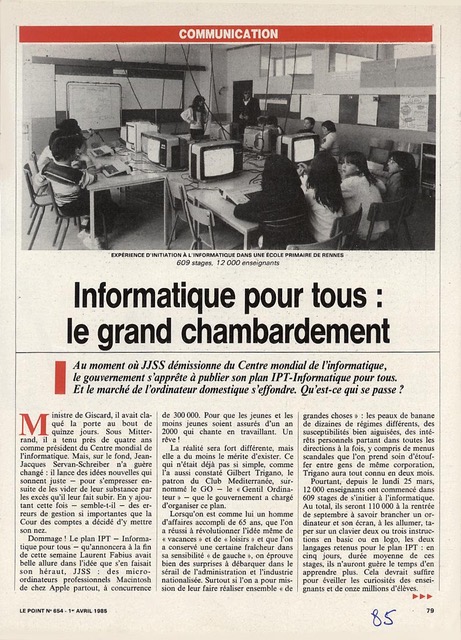

Figure 1: Today’s questions about AI and learning echo those of educators, students, and government leaders in the 1980s, when learning with computers was introduced into classrooms around the world.

BEYOND ACCEPTANCE OR DISMISSAL: AWARENESS AND COLLECTIVE DEBATE ABOUT WHAT'S CHANGING AND HOW IT MATTERS

Attending to the situated conditions of knowledge-production makes us wary both of the tendency to accept chatbots unquestioningly as engines of efficiency in learning, teaching, and research and of the tendency to dismiss them hastily for fear of unacceptable risks. Instead, we need opportunities to learn about AI in education together, across disciplines. Cross-disciplinary workshops, discussions, or the collective development of best-practice recommendations with examples from specific epistemic cultures can help students, teachers, and researchers assess our changing educational and research environment and discover our agency to shape it.

Beyond these insights, our collective aim with this study is to contribute to and develop the conversation at ETH about issues at the intersection of technology and society that affect our community and our world. In the process of working on this project, we discovered that the main challenge is not doing the analysis and writing the content, but inventing the appropriate voice and form that will invite and enable others to recognize their interest in the issue and join the conversation.

List of Figures

Figure 1: Daniel Garric, “Informatique pour tous: le grand chambardement,” Le Point, no. 654, 1 April 1985, pp. 79–81.


Seminar

A version of this text was produced within a collective project called “Text-Generating AI in Learning and Research: Student Perspectives” in the course “Digital Ethics and Politics” at ETH Zurich in the Spring 2023 semester.

Edited by

Ines Barner, Niklaus Schneider and Christina Tuggener
